Cloud data co-location prototype

The cloud data co-location use case is a very interesting one. Probably the biggest reasons why organizations choose not to move a workload to the cloud are:

- compliance / regulatory requirements

- lock-in risks and difficulty/costs of migrating to another public/private cloud

- unpredictable and/or high costs

Last year I participated in a project that studied the feasibility of implementing "cloud data co-location". This basically consists of hosting a traditional storage array in a datacenter that is physically close to a public cloud and connecting to it through a high-throughput, low-latency link. Some providers, like Equinix in APJ, host one (or sometimes many) public clouds in their datacenters and offer a "direct-connect" link to these clouds. This allows you to leverage all the compute offerings from the public cloud while retaining control of your data outside (but near) the cloud.

Since the data is already sitting outside the cloud, there is less risk of lock-in, and certainly no astronomical egress charges. It is also much easier to satisfy regulatory requirements. Finally, costs can be lower than storage offerings from the cloud itself, especially when high performance is required.

In this article you can see the conclusions of the project. In summary, the co-located setup provides better performance than the public cloud, and it can make sense for many use cases. One important observation from our tests was that NAS protocols (NFS and CIFS) provide better performance than iSCSI.

Recently, a few colleagues from APJ and I created a group of Infrastructure-as-Code enthusiasts and gave ourselves the name IaC Avengers. As a foundational project we ran a two-day hackathon whose objective was to build a prototype for the cloud data colo use case. We referred to it as "Project Nebula".

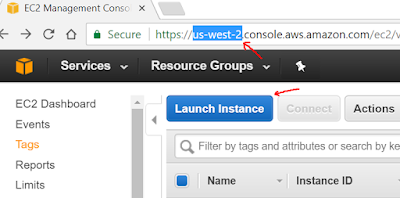

For this we used Ansible with a very simple web frontend. The idea was to create a VM on any cloud, including vSphere (whether VMC or on-prem), then provision an NFS share and automate mounting it on the VM. We also worked on day-2 operations such as adding storage to an existing VM. From a storage perspective we provided options for DellEMC Unity and for VxFlex (ScaleIO).
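To give a feel for the last step, mounting the share on the VM, here is a minimal Python sketch. It is not the code from the prototype (which was driven by Ansible); it only builds the shell commands and the `/etc/fstab` entry that such an automation would end up applying. The function name `nfs_mount_plan`, the server name, the export path, the mount point and the default mount options are all hypothetical values chosen for illustration.

```python
import shlex


def nfs_mount_plan(server, export, mount_point, options=("rw", "hard", "vers=3")):
    """Build the commands and fstab line needed to mount an NFS export.

    All arguments are illustrative; the default options are an assumption,
    not the settings used in the prototype.
    """
    source = f"{server}:{export}"   # NFS source in host:/path form
    opts = ",".join(options)        # comma-separated mount options
    commands = [
        # Create the mount point if it does not exist yet
        f"mkdir -p {shlex.quote(mount_point)}",
        # Mount the export right away
        f"mount -t nfs -o {shlex.quote(opts)} "
        f"{shlex.quote(source)} {shlex.quote(mount_point)}",
    ]
    # fstab entry so the mount is restored after a reboot
    fstab_line = f"{source} {mount_point} nfs {opts} 0 0"
    return commands, fstab_line


if __name__ == "__main__":
    # Hypothetical share and mount point, for illustration only
    cmds, fstab = nfs_mount_plan(
        "nas.example.internal", "/nebula_share01", "/mnt/nebula"
    )
    for cmd in cmds:
        print(cmd)
    print(fstab)
```

Nothing here touches the system: the function only returns strings, so the plan can be reviewed or handed to whatever tool actually runs it on the VM.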

In this video you can see a demo of the prototype in action for vSphere with Unity and VxFlex.
