AWS Lambda hands-on with Python

Serverless and Function-as-a-Service are not new concepts, but they have become very popular since AWS launched Lambda in 2015. The objective of this post is to give you some guidance so you can experience it for yourself. We will do a very quick introduction, explore the pros and cons, and then jump straight into the hands-on.

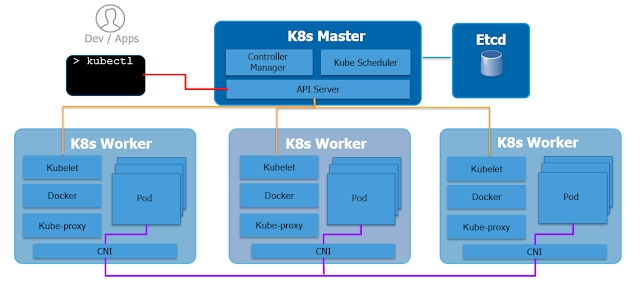

The idea is that you write a function in your language of choice, which is then run in a container when triggered by an event. The word serverless comes from the fact that you only worry about the code, i.e. you don't have to manage the server, the container, the virtual machine, or whatever it is running on. From that point of view PaaS is "serverless" as well, hence Function-as-a-Service is perhaps a better term. The difference between PaaS and FaaS is that with PaaS your unit of deployment is the full microservice (which is likely to contain several functions), whereas with FaaS it is a single function.

FaaS leverages the speed at which containers can be spun up. When an event triggers the function, the FaaS platform spins up a stateless container, runs the function, and tears the container down once it is no longer needed (Lambda may keep it around briefly and reuse it for subsequent invocations). Spinning up containers is fast, but the extra delay of a "cold start" may still be too much for very latency-sensitive applications.
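Conceptually each invocation gets a fresh container, but Lambda may reuse a warm container for later invocations, which is why expensive setup (SDK clients, connections) is usually done at module level rather than inside the handler. Here is a minimal sketch that makes the reuse visible. The counter is for illustration only and must never be treated as durable state.

```python
import time

# Module-level code runs once per container (at cold start), not once per invocation.
_CONTAINER_STARTED = time.time()
_invocations = 0


def lambda_handler(event, context):
    global _invocations
    _invocations += 1
    # A fresh container reports 1; a reused ("warm") container reports more.
    # Never rely on this value: Lambda may discard the container at any time.
    return {
        "cold_start": _invocations == 1,
        "invocations_in_this_container": _invocations,
        "container_age_seconds": round(time.time() - _CONTAINER_STARTED, 3),
    }
```

Invoking the function twice in quick succession will often, but not always, show the second call landing in the same container.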

With PaaS, the recommendation is for your microservices to be stateless, although in theory you can still keep things in memory for a while; with FaaS, there is no choice. Furthermore, FaaS providers cap a function's running time at a few minutes. If the function runs longer than that, it is forcefully terminated.
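Because of that hard limit, long-running work is safer if the function keeps an eye on the clock. The Lambda context object exposes `get_remaining_time_in_millis()`. Below is a sketch that stops before the deadline and reports what is left, assuming a hypothetical event of the form `{"items": [...]}` and an arbitrary 10-second safety margin.

```python
def lambda_handler(event, context):
    """Process items from the event, stopping early if the timeout is near.

    The event shape {"items": [...]} and the safety margin are hypothetical.
    """
    safety_margin_ms = 10_000  # hypothetical: leave 10 s to return cleanly
    processed, remaining = [], list(event.get("items", []))
    while remaining:
        # get_remaining_time_in_millis() is provided by the Lambda runtime's context object.
        if context.get_remaining_time_in_millis() < safety_margin_ms:
            break
        item = remaining.pop(0)
        processed.append(item * 2)  # placeholder for the real work
    # Report leftovers so a caller (or a follow-up invocation) can pick them up.
    return {"processed": processed, "unprocessed": remaining}
```

Outside Lambda you can exercise it with a stand-in object whose `get_remaining_time_in_millis()` returns decreasing values.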

Scaling out in FaaS is super easy: your function scales out and back in transparently based on demand, without you having to do anything. That is also why FaaS providers must stop functions from running too long; otherwise, a large number of long-running invocations could exhaust the platform's capacity, effectively becoming a denial-of-service (DoS) attack.

FaaS relies on events to trigger the functions. For example, AWS Lambda supports triggers such as S3, API Gateway, DynamoDB, Kinesis streams, etc. This is very convenient, but it also poses a big risk of lock-in. This is the problem Pivotal is trying to tackle with PFS (Pivotal Function Service), which is based on the open-source project Riff and is scheduled for later this year. The idea is to make functions portable between clouds, in the same way that Cloud Foundry has done for cloud-native applications.

How do you choose? In general, the recommendation is to select the deployment method with the highest level of abstraction that meets your requirements, since this gives you the greatest operational efficiency. But after playing with FaaS for a while, I don't see much operational benefit compared to PaaS; in fact, logging and monitoring are substantially harder with FaaS. My personal preference is to use FaaS when the workload is expected to have uneven load. Because you pay only while your function runs, FaaS is very cost-efficient in that case, but if your workload sees fairly uniform load, FaaS will be more expensive.
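To make the uneven-load argument concrete, here is a back-of-the-envelope model. Lambda bills per request plus per GB-second (memory allocated × execution time), while an always-on instance bills for every hour whether or not it is busy. All prices below are hypothetical placeholders, not current AWS pricing, and the model ignores free tiers and capacity limits; plug in real figures for your region.

```python
def faas_monthly_cost(invocations, avg_duration_s, memory_gb,
                      price_per_million_requests, price_per_gb_second):
    """Pay-per-use: cost scales with the number of invocations and their duration."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = invocations * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


def always_on_monthly_cost(instances, price_per_hour, hours=730):
    """Fixed capacity: you pay for every hour, busy or idle (~730 hours per month)."""
    return instances * price_per_hour * hours


# Hypothetical prices, for illustration only.
PRICES = {"price_per_million_requests": 0.20, "price_per_gb_second": 0.0000167}

spiky = faas_monthly_cost(100_000, 0.5, 0.128, **PRICES)       # low, bursty volume
steady = faas_monthly_cost(50_000_000, 0.5, 0.128, **PRICES)   # sustained high volume
server = always_on_monthly_cost(1, 0.05)                       # hypothetical hourly rate
print(f"FaaS, spiky/low volume:   ${spiky:.2f}")
print(f"FaaS, steady high volume: ${steady:.2f}")
print(f"Always-on instance:       ${server:.2f}")
```

With these placeholder numbers the low-volume function costs cents, while the steady 50-million-request workload ends up costing more than the always-on instance, which is the trade-off described above.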

Thanks for bearing with me this long. To get started with the hands-on, I recommend the "hello world" tutorial from AWS itself; after that, I can take you a bit further.

https://docs.aws.amazon.com/lambda/latest/dg/get-started-create-function.html

When a function is triggered, it is useful to have some details about the event that triggered it, so that we can customize the function's behaviour. This "event metadata" depends on the type of event. The page below shows examples for the various event sources in the AWS ecosystem. As you can see, they are in JSON format, so they are easy to parse (in fact, the Python runtime hands the event to your handler already parsed into a dictionary).

http://docs.aws.amazon.com/lambda/latest/dg/eventsources.html
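Since the event shape depends on its source, one function can inspect a few well-known keys to tell sources apart. This is a best-effort sketch based on the common shapes of the sample events, not an official or exhaustive mapping; the returned labels such as `"aws:apigateway"` are hypothetical names chosen for this example.

```python
def event_source(event):
    """Best-effort guess of which AWS service produced a Lambda event."""
    records = event.get("Records")
    if records:
        first = records[0]
        # S3, DynamoDB Streams and Kinesis records use "eventSource"; SNS uses "EventSource".
        return first.get("eventSource") or first.get("EventSource") or "unknown-records"
    if "httpMethod" in event:
        return "aws:apigateway"  # hypothetical label for an API Gateway proxy request
    if event.get("source") == "aws.events":
        return "aws:events"  # hypothetical label for a CloudWatch Events (scheduled) event
    return "unknown"


def lambda_handler(event, context):
    # The Python runtime has already deserialized the JSON event into a dict.
    source = event_source(event)
    print(f"Triggered by {source}")  # stdout ends up in CloudWatch Logs
    return {"source": source}
```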

For example, let's say we want to trigger a function when a new photo is uploaded into an S3 bucket, and the function then creates a thumbnail. In this case, we need to know the name of the image that was just uploaded, so within the function we have to parse the event JSON to extract it.
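Here is a minimal sketch of that parsing, assuming the standard S3 object-created notification layout shown on the event sources page (`Records[0].s3.bucket.name` and `Records[0].s3.object.key`). Object keys arrive URL-encoded, so they are decoded with `urllib.parse.unquote_plus`. The thumbnail step itself is left out.

```python
import urllib.parse


def lambda_handler(event, context):
    # S3 notifications carry a list of records; take the first one.
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    # Object keys arrive URL-encoded (e.g. spaces become "+"), so decode them.
    key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
    # Thumbnail generation would go here; this sketch just returns the names.
    return {"bucket": bucket, "key": key}
```

For a local try, pass a trimmed-down sample event, e.g. with a hypothetical bucket `my-photos` and key `holiday+photo.jpg`. The handler should return the decoded name `holiday photo.jpg`.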

Let's now look at a different challenge. The containers that Lambda uses are pre-built for each language and come with a large set of libraries already installed. The following link shows the libraries that your Python containers will have. As you can see, it includes the whole Boto ecosystem, which you need to interact with other AWS services, as well as many other common libraries.

https://gist.github.com/gene1wood/4a052f39490fae00e0c3
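A list like this can go stale as runtimes are updated, so it can be handy to ask the runtime directly. Below is a small sketch using the standard library's `importlib.util.find_spec`. The module names checked by default are only examples.

```python
import importlib.util
import sys


def lambda_handler(event, context):
    """Report which top-level modules can be imported in this runtime."""
    names = event.get("modules", ["boto3", "botocore", "urllib3", "requests"])
    # find_spec() locates a top-level module without importing it; None means not installed.
    available = {name: importlib.util.find_spec(name) is not None for name in names}
    return {"python": sys.version.split()[0], "available": available}
```

Test it from the console with an event such as `{"modules": ["requests"]}` to see whether a given library needs to be packaged.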

However, no matter how many libraries are included, sooner or later you are going to need one that isn't. For example, a nice use case for my functions is calling a REST API at periodic intervals. For that purpose, the Python container includes the "urllib3" library, but I prefer to work with the "requests" library, which is not there. The solution is to create a deployment package and upload it: a ZIP file that contains our function script plus any libraries the script requires.

As an example, let's use a very simple REST API that returns the current location of the International Space Station. A single HTTP GET call returns a JSON document with the ISS coordinates.
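For comparison, if you would rather avoid packaging altogether, the same call can be made with the standard library's `urllib.request`. This sketch assumes the response looks like `{"message": "success", "timestamp": ..., "iss_position": {"latitude": "...", "longitude": "..."}}`, with the coordinates as strings; check the live API before relying on that shape.

```python
import json
import urllib.request

ISS_URL = "http://api.open-notify.org/iss-now.json"


def parse_iss_position(payload):
    """Extract (latitude, longitude) as floats from an iss-now.json payload.

    Assumes the shape {"iss_position": {"latitude": "...", "longitude": "..."}}.
    """
    data = json.loads(payload)
    position = data["iss_position"]
    return float(position["latitude"]), float(position["longitude"])


def lambda_handler(event, context):
    # Standard-library HTTP GET: no third-party packages to bundle.
    with urllib.request.urlopen(ISS_URL, timeout=10) as response:
        latitude, longitude = parse_iss_position(response.read())
    return {"latitude": latitude, "longitude": longitude}
```

Keeping the parsing in its own function means it can be checked locally against a saved response without any network access.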

Open Command Prompt and create a directory for the project:

```
mkdir testfunction
```

Now install the modules we require into that directory using pip's "-t" (--target) option, in this case requests:

```
pip install requests -t testfunction
```

Check the result:

```
C:\testfunction>dir
 Volume in drive C is Windows
 Volume Serial Number is E25C-DC85

 Directory of C:\testfunction

05/20/2018  02:06 PM    <DIR>          .
05/20/2018  02:06 PM    <DIR>          ..
05/20/2018  02:05 PM    <DIR>          certifi
05/20/2018  02:05 PM    <DIR>          certifi-2018.4.16.dist-info
05/20/2018  02:05 PM    <DIR>          chardet
05/20/2018  02:05 PM    <DIR>          chardet-3.0.4.dist-info
05/20/2018  02:06 PM    <DIR>          idna
05/20/2018  02:06 PM    <DIR>          idna-2.6.dist-info
05/20/2018  02:06 PM    <DIR>          requests
05/20/2018  02:06 PM    <DIR>          requests-2.18.4.dist-info
05/20/2018  02:05 PM    <DIR>          urllib3
05/20/2018  02:05 PM    <DIR>          urllib3-1.22.dist-info
               0 File(s)              0 bytes
              12 Dir(s)  68,236,562,432 bytes free
```

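Create a script in the directory called "iss.py" and drop in the following code:

```python
import requests


def lambda_handler(event, context):
    # Fetch the current ISS position from the Open Notify API.
    response = requests.get("http://api.open-notify.org/iss-now.json", timeout=10)
    # Return parsed JSON: raw bytes (response.content) are not JSON-serializable on Python 3.
    return response.json()
```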

Now, in Windows Explorer, go into the directory, select all the files, and choose Send to > Compressed (zipped) folder. It is very important that you do this from inside the directory itself: when Lambda decompresses the file, your script must be in the root folder of the archive, not in a subdirectory.
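If you would rather script this step than use Explorer, Python's standard `zipfile` module can build the archive. In this sketch, every file is stored with a path relative to the project directory, so `iss.py` ends up at the archive root as Lambda expects. Write the ZIP outside the source directory so it does not try to include itself.

```python
import os
import zipfile


def build_lambda_zip(source_dir, zip_path):
    """Zip the *contents* of source_dir so its files sit at the archive root."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for root, _dirs, files in os.walk(source_dir):
            for filename in files:
                full_path = os.path.join(root, filename)
                # arcname is relative to source_dir: "iss.py", not "testfunction/iss.py".
                archive.write(full_path, arcname=os.path.relpath(full_path, source_dir))
    return zip_path
```

For this walkthrough, that would be `build_lambda_zip("testfunction", "function.zip")`, run from the parent directory. The output name `function.zip` is just an example. `zipfile.ZipFile("function.zip").namelist()` should then list `iss.py` with no folder prefix.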

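Now go to AWS Lambda and create a function, but this time select "upload the .ZIP file". Also make sure the "Handler" setting matches the name of the script and the handler function, in the form file_name.function_name (for iss.py with lambda_handler, that is iss.lambda_handler).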

After uploading, you can select "Edit code inline" to view and edit your code.

Now when you test it, you will see the coordinates of the ISS. If you wanted to run this at periodic intervals and have it email you the result, you could use a CloudWatch scheduled event to trigger it and SNS to send the notification.
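Here is a sketch of the notification half. It assumes you have created an SNS topic with an email subscription and pass its ARN in a hypothetical `TOPIC_ARN` environment variable. It uses boto3's SNS `publish` call, which is available because boto3 ships with the Lambda Python runtime. The client can be injected, so the message formatting can be tried locally without AWS credentials. The schedule itself is configured outside the code, as a CloudWatch Events rule with an expression such as `rate(1 hour)`.

```python
import os


def format_message(position):
    """Turn an ISS position dict into a short plain-text email body."""
    return f"The ISS is currently at latitude {position['latitude']}, longitude {position['longitude']}."


def notify(position, sns_client=None, topic_arn=None):
    # Import boto3 lazily: it ships with the Lambda Python runtime,
    # but a fake client can be passed in when running locally.
    if sns_client is None:
        import boto3
        sns_client = boto3.client("sns")
    topic_arn = topic_arn or os.environ["TOPIC_ARN"]  # hypothetical environment variable
    return sns_client.publish(
        TopicArn=topic_arn,
        Subject="ISS position",
        Message=format_message(position),
    )
```

Inside the handler, you would call `notify(...)` with the coordinates returned by the ISS request.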

I hope you enjoyed this little intro to Function-as-a-Service and AWS Lambda with Python.